Speech Recognition Plugin

| Name | Speech Recognition Plugin |
|---|---|
| Category | Input |
| Author | ShaneC |

SphinxUE4: Speech Recognition Plugin


Help support by donating

I wish to keep this plugin freely available to anyone, for whatever use they have, and if possible, help them with that goal.

I have spent quite a lot of time working on the plugin, and helping others get it working.

If you feel the plugin has been helpful, a PayPal donation to shane.colbert@gmail.com would mean the world. Thank you!!!

Sphinx-UE4

IMPORTANT NOTES:

- Blueprint-only projects will not package properly with plugins. This is a known Unreal Engine issue for the time being. To work around it, add an empty C++ class to your Blueprint-only project.

- When packaging a project, ensure the model folder is included in 'Additional non-asset directories to Copy' (in Project Settings => Packaging).

Sphinx-UE4 is a speech recognition plugin for Unreal Engine 4, built on the PocketSphinx library. At the moment, the plugin is best used to detect phrases (e.g. "open browser"); single-word recognition is poor. I am looking at ways to improve this to a passable level.

http://cmusphinx.sourceforge.net

Demo Project

The following links point to demo projects for Windows and Mac (the Mac project is from a previous version, for the moment).

Mac (4.12): https://github.com/shanecolb/sphinx-ue4/releases/download/1.1/Sphinx_UE4_Test_MacOSX.zip

Windows (4.16): https://drive.google.com/open?id=0BxR5qe2wdwSLRWU3cFhCSGRqcDA

Windows (4.17): https://drive.google.com/open?id=1FPxlq1FLEY2Y51F3KnDPNb4UVgQeyMLZ

Windows (4.18): https://drive.google.com/open?id=1_UPQg3wj9DEf8nbkUZDc3oDbfsLBX4rs

The project includes a few example maps, each showing a different mode of detection/recognition.

Maps

- GameExample:

Speech controls a character, moving it around a soccer field: walking/running, turning and kicking the ball. Demo video: https://www.youtube.com/watch?v=b6vd0pWSGKU

Rules

The game timer is set to 3 minutes. A ball of a random colour (blue or red) spawns. A goal is scored by kicking the ball into the goal whose colour matches the ball. When a goal is scored, a new ball spawns.

How to play

When the game starts, it is set up in keyword listening mode and responds to the following phrases:

start the game: Starts a game of soccer.

enable walk: The character starts walking.

enable sprint: The character starts sprinting.

turn left: Rotates the character 45 degrees to the left.

turn right: Rotates the character 45 degrees to the right.

kick the ball: If a ball is in range, the player kicks the ball.

one eighty: Rotates the character 180 degrees.

stop movement: Stops all character movement.

- GrammarTest:

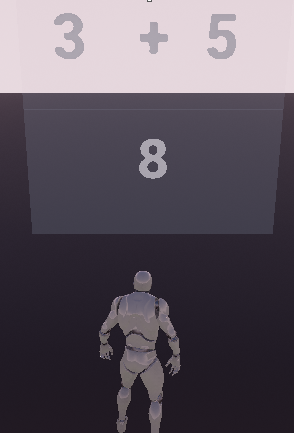

This example demonstrates grammar file support. The grammar has the form <digit> <operation> <digit>. When a recognised phrase matches this form, the operation and its result are displayed. For example, "three add five" looks like this:

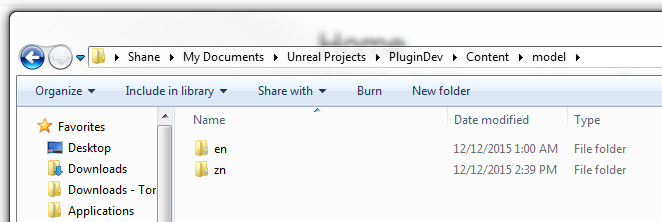
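The demo builds this logic in Blueprint. As a rough, engine-independent illustration of the same idea, the C++ sketch below parses a recognised string of the form <digit> <operation> <digit> and computes the result. Only "add" appears in the example above; the other operation words ("subtract", "multiply", "divide") are hypothetical, so check the grammar file in the demo project for its real vocabulary.

```cpp
#include <cassert>
#include <map>
#include <optional>
#include <sstream>
#include <string>

// Maps a spoken digit word to its value, or nothing if it is not a digit.
std::optional<int> DigitValue(const std::string& word) {
    static const std::map<std::string, int> kDigits = {
        {"zero", 0}, {"one", 1}, {"two", 2}, {"three", 3}, {"four", 4},
        {"five", 5}, {"six", 6}, {"seven", 7}, {"eight", 8}, {"nine", 9}};
    auto it = kDigits.find(word);
    if (it == kDigits.end()) return std::nullopt;
    return it->second;
}

// Evaluates "<digit> <operation> <digit>", e.g. "three add five".
// Only "add" comes from the demo; the other operation words are hypothetical.
// Returns std::nullopt if the phrase does not match the form exactly.
std::optional<int> EvaluatePhrase(const std::string& phrase) {
    std::istringstream in(phrase);
    std::string lhsWord, op, rhsWord, extra;
    // Require exactly three words.
    if (!(in >> lhsWord >> op >> rhsWord) || (in >> extra)) return std::nullopt;

    auto lhs = DigitValue(lhsWord);
    auto rhs = DigitValue(rhsWord);
    if (!lhs || !rhs) return std::nullopt;

    if (op == "add") return *lhs + *rhs;
    if (op == "subtract") return *lhs - *rhs;
    if (op == "multiply") return *lhs * *rhs;
    if (op == "divide" && *rhs != 0) return *lhs / *rhs;  // integer division
    return std::nullopt;
}
```

`EvaluatePhrase("three add five")` returns 8, while anything outside the grammar (for example "three plus five") returns an empty optional, mirroring how only phrases that match the form are acted on.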

- Language Test:

If you click on the character in the map, you can see that Language is a selectable property. English, Chinese, French, Spanish and Russian are supported. Take a look at the blueprint to see which words are added for each language. I only speak English, so testing of the other languages is probably patchy.

- Volume Test:

An approximate microphone volume can be obtained in Blueprints. In this example, the cone radius of the light is affected by the microphone volume.
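A minimal sketch of the kind of mapping the Volume Test performs, written as plain C++ rather than Blueprint. The volume range (0 to 1), the radius range and the smoothing step are all assumptions for illustration; check the values the plugin actually reports before reusing these numbers.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical mapping from an approximate microphone volume to a light's
// cone radius. The 0..1 volume range is an assumption, not the plugin's spec.
struct VolumeToRadius {
    float MinVolume = 0.0f;
    float MaxVolume = 1.0f;
    float MinRadius = 10.0f;
    float MaxRadius = 80.0f;
    float Smoothing = 0.2f;  // 0 = output frozen, 1 = no smoothing
    float Current = 10.0f;   // last radius returned

    float Update(float volume) {
        assert(MaxVolume > MinVolume);
        // Normalise the reading to [0, 1], clamping out-of-range values.
        float t = std::clamp((volume - MinVolume) / (MaxVolume - MinVolume), 0.0f, 1.0f);
        float target = MinRadius + t * (MaxRadius - MinRadius);
        // Move part of the way toward the target each call.
        Current += Smoothing * (target - Current);
        return Current;
    }
};
```

Call `Update` once per frame with the latest volume reading. The smoothing is a design choice, not something the demo is known to do: raw microphone levels tend to jump between frames, and easing toward the target keeps the light from flickering.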

How to use the Plugin in your project

- Download the demo project (for your respective OS/UE version).

- Copy the "Content/model" and "Plugins" folders from the demo project into your project.

- Right-click the .uproject file and regenerate the project files (on Windows, "Generate Visual Studio project files").

- Open the Visual Studio solution, rebuild it, and open the project in UE4.

- In the editor, enable the Speech Recognition plugin (Edit => Plugins).

- Open the Blueprint of whichever actor/class you wish to add speech recognition functionality to.

I will now run through the necessary changes:

Blueprint Changes

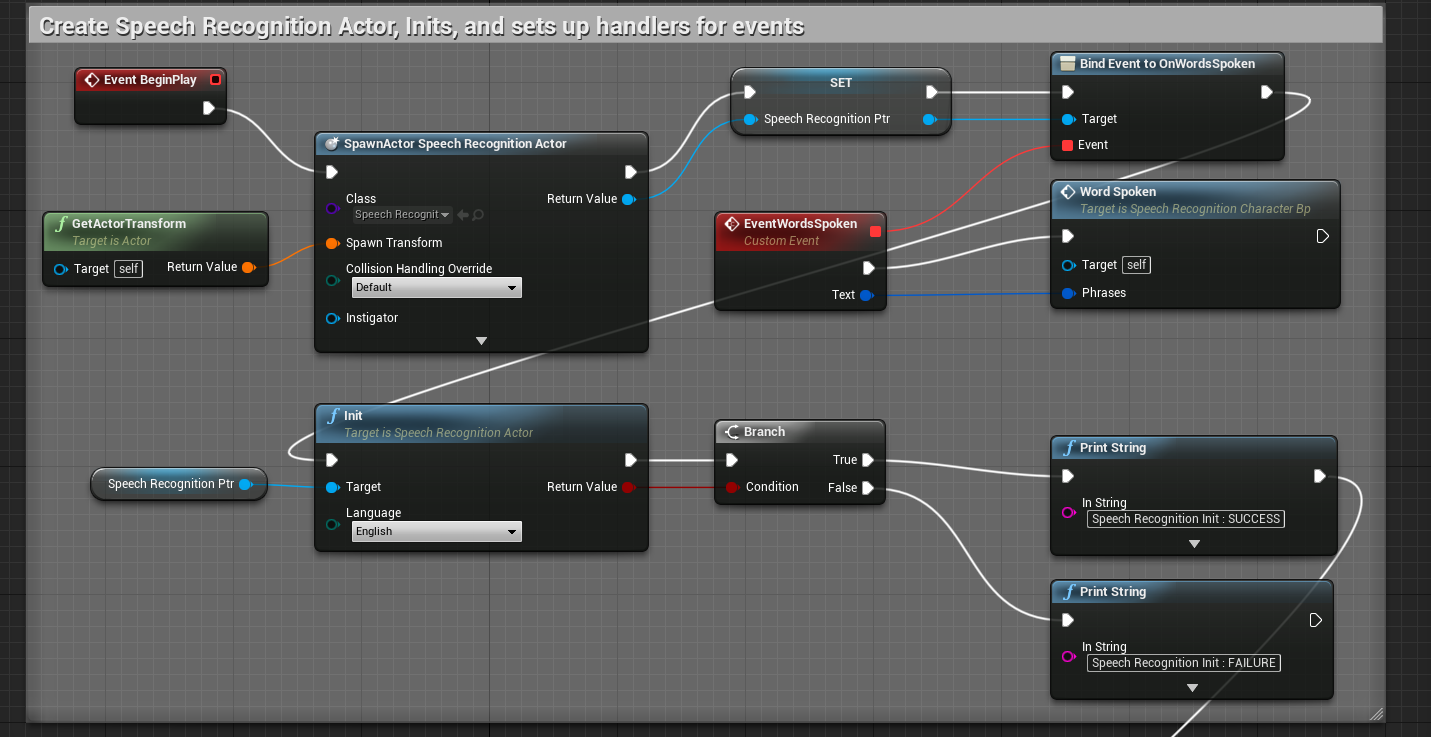

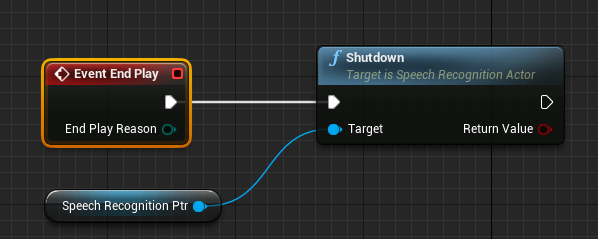

1) When the Begin Play event fires, create a Speech Recognition actor and save a reference to it. Next, create a method and bind it to OnWordsSpoken; this method is triggered each time a recognised phrase is spoken. Lastly, ensure Shutdown (on the Speech Recognition actor) is called during End Play.

2) Once the actor has been created, we initialise it and set the configuration parameters for Sphinx.

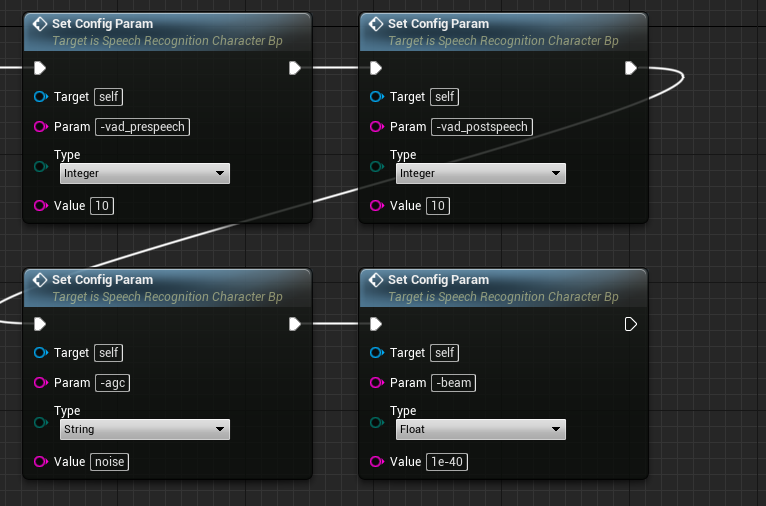

There is a large range of Sphinx params that can be configured.

NOTE: Setting the recognition mode (keyword/grammar) resets any Sphinx params that were previously added.

Set the Sphinx config params again before each change of recognition mode.
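To make the ordering concrete, here is a small C++ model of the behaviour described in the note. `MockRecogniser`, `SetConfigParam`, `SetRecognitionMode` and `example_param` are hypothetical names, not the plugin's API, and the model assumes params are applied when the mode is set and discarded afterwards.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical stand-in for the speech recognition actor, modelling only the
// rule above: changing the recognition mode resets previously added params.
class MockRecogniser {
public:
    void SetConfigParam(const std::string& name, const std::string& value) {
        pending_[name] = value;
    }
    // Assumed behaviour: the pending params take effect, then are cleared.
    void SetRecognitionMode(const std::string& mode) {
        mode_ = mode;
        active_ = pending_;
        pending_.clear();
    }
    const std::map<std::string, std::string>& ActiveParams() const { return active_; }
    const std::string& Mode() const { return mode_; }

private:
    std::map<std::string, std::string> pending_;
    std::map<std::string, std::string> active_;
    std::string mode_;
};

// Re-adds the same params before every mode switch, so none are lost.
void SwitchMode(MockRecogniser& r, const std::string& mode,
                const std::map<std::string, std::string>& params) {
    for (const auto& [name, value] : params) r.SetConfigParam(name, value);
    r.SetRecognitionMode(mode);
}
```

Calling `SetRecognitionMode` a second time without re-adding params leaves `ActiveParams()` empty in this model; routing every mode change through a helper like `SwitchMode` avoids that.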

Although it is somewhat dated, the following page provides a detailed list of the various Sphinx params:

https://github.com/watsonbox/pocketsphinx-ruby/wiki/Default-Pocketsphinx-Configuration

At the moment, I set the following params and would suggest trying the same (I am still experimenting to find what works best):

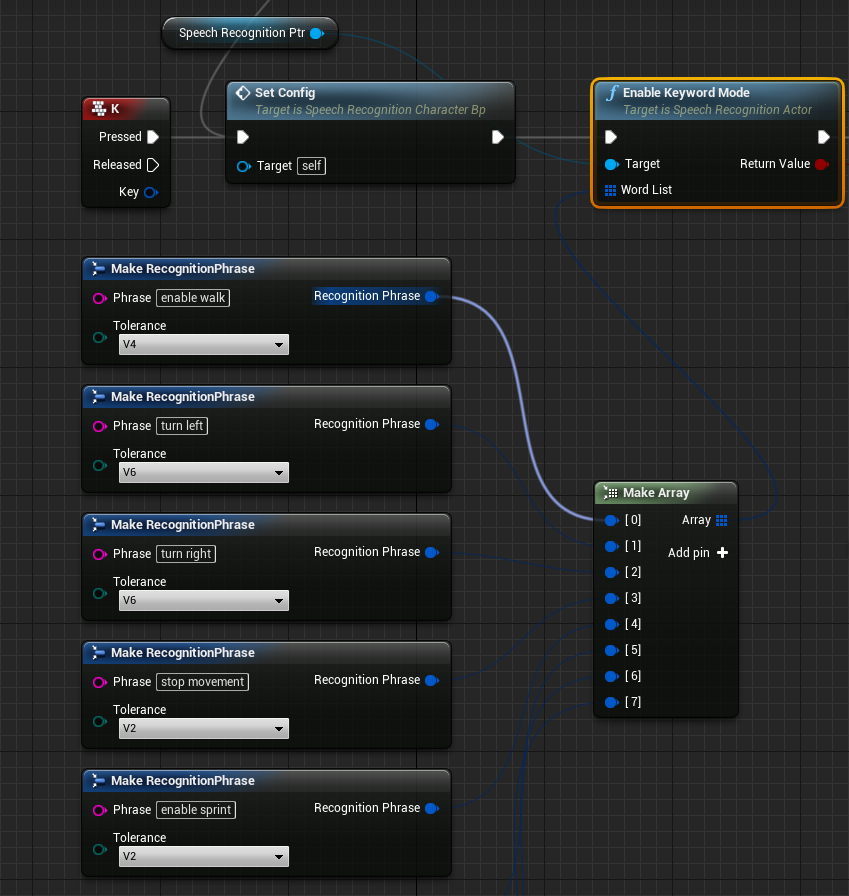

3) Next, we set the recognition mode to Keyword and pass in a set of key phrases.

These are used to determine which phrases are spoken by the player.

A Recognition Phrase comprises a string (representing the phrase we wish to detect) and a tolerance setting.

This tolerance determines how easily a phrase will trigger.

Experiment with the tolerance settings to find a balance between sensitivity and false positives.

If your phrase contains words that are not in the dictionary, those words will not be detected. To add words to the dictionary, open the .dict file for your chosen language (e.g. for English, "Content\model\en\en.dict").

This file lists the recognised words, one per line. The first string on each line is the word itself; the rest is its phonetic transcription, i.e. the phonemes used to recognise it.

Here are some examples:

abbott AE B AH T

ball B AO L

bandit B AE N D AH T

Add new words in the same format, then re-save the file.
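Since a phrase depends on every word in it being in the dictionary, it can help to check phrases before using them. The sketch below is plain C++, not part of the plugin: it parses .dict content in the format shown above and lists any words of a phrase that are missing. It assumes lower-case words, as in the examples, and treats alternate pronunciations (written as word(2) in CMU-style dictionaries) as separate entries.

```cpp
#include <cassert>
#include <istream>
#include <map>
#include <sstream>
#include <string>
#include <vector>

using Dictionary = std::map<std::string, std::vector<std::string>>;

// Parses .dict content: a word followed by its phonemes on each line,
// e.g. "ball B AO L". Blank lines are skipped.
Dictionary ParseDict(std::istream& in) {
    Dictionary dict;
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream fields(line);
        std::string word, phone;
        if (!(fields >> word)) continue;  // blank line
        std::vector<std::string> phones;
        while (fields >> phone) phones.push_back(phone);
        dict[word] = phones;
    }
    return dict;
}

// Returns the words of `phrase` that have no dictionary entry.
// The lookup is case-sensitive.
std::vector<std::string> MissingWords(const std::string& phrase, const Dictionary& dict) {
    std::vector<std::string> missing;
    std::istringstream words(phrase);
    std::string word;
    while (words >> word)
        if (dict.find(word) == dict.end()) missing.push_back(word);
    return missing;
}
```

Running `MissingWords` over each key phrase after editing the .dict file is a quick way to catch typos before testing in the editor.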

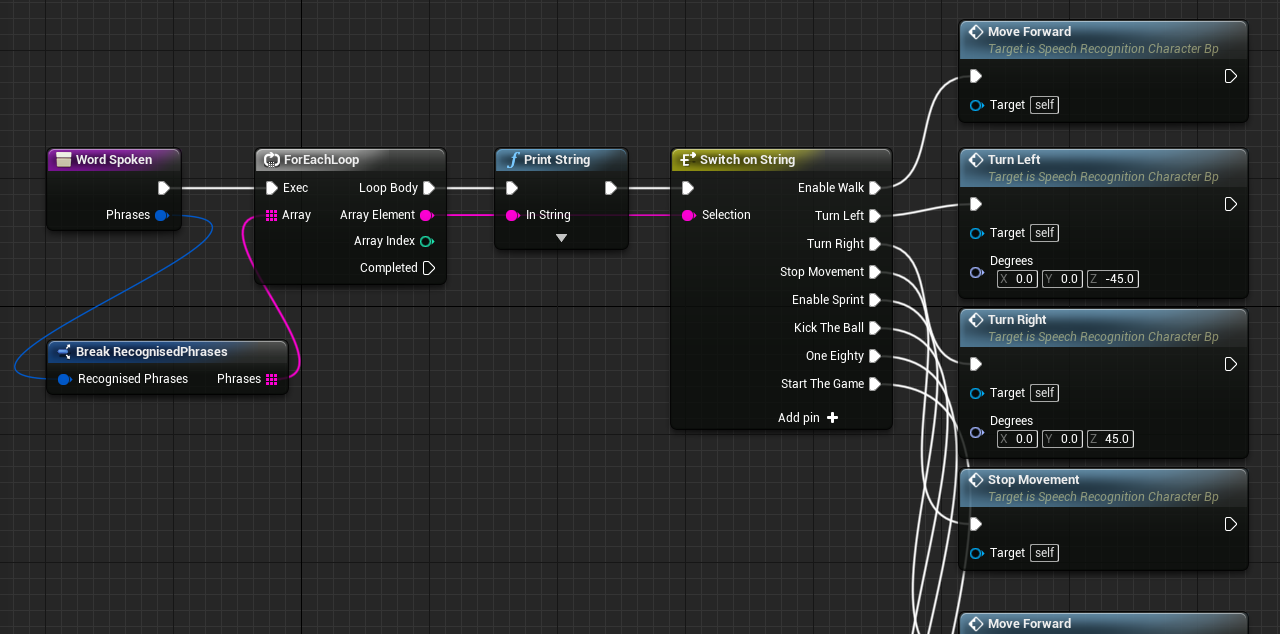

4) The WordSpoken method receives an array of recognised phrases, which is looped over to trigger in-game logic.
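A rough C++ analogue of step 4, using the GameExample phrases: each recognised phrase is looked up in a table of actions and applied in order. The `Character` struct, the action table and the yaw sign convention are illustrative assumptions; in the demo this logic lives in the Blueprint event graph.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-in for the GameExample character.
struct Character {
    int YawDegrees = 0;  // kept in [0, 360)
    bool Walking = false;
    bool Sprinting = false;
};

// Rotates the character, wrapping yaw into [0, 360).
// Treating "left" as negative yaw is an assumed convention.
void Turn(Character& c, int delta) {
    c.YawDegrees = ((c.YawDegrees + delta) % 360 + 360) % 360;
}

// Maps each key phrase to the in-game action it triggers.
using ActionMap = std::map<std::string, std::function<void(Character&)>>;

ActionMap MakeActions() {
    return {
        {"turn left",     [](Character& c) { Turn(c, -45); }},
        {"turn right",    [](Character& c) { Turn(c, 45); }},
        {"one eighty",    [](Character& c) { Turn(c, 180); }},
        {"enable walk",   [](Character& c) { c.Walking = true; c.Sprinting = false; }},
        {"enable sprint", [](Character& c) { c.Sprinting = true; c.Walking = false; }},
        {"stop movement", [](Character& c) { c.Walking = false; c.Sprinting = false; }},
    };
}

// Loops over the recognised phrases, as the WordSpoken handler does,
// applying the action for each phrase that has one.
void HandleWordsSpoken(const std::vector<std::string>& phrases,
                       const ActionMap& actions, Character& character) {
    for (const auto& phrase : phrases) {
        auto it = actions.find(phrase);
        if (it != actions.end()) it->second(character);
    }
}
```

Phrases with no registered action are ignored, so the same handler can stay bound however many key phrases are active.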

5) IMPORTANT: Make sure the Shutdown method is called on the End Play event. Otherwise, crashes will occur if multiple instances start up.
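The plugin is used from Blueprint, so this is only an analogy. Assuming the recogniser can safely run as one instance at a time, the C++ sketch below ties shutdown to the owner's lifetime (RAII), the same role End Play plays in the Blueprint. `RecogniserSession` and its methods are hypothetical names.

```cpp
#include <cassert>
#include <stdexcept>

// Hypothetical wrapper treating the recogniser (microphone + decoder) as a
// single shared resource. Names are illustrative, not the plugin's API.
class RecogniserSession {
public:
    RecogniserSession() {
        if (active_) throw std::runtime_error("a recogniser is already running");
        active_ = true;
    }
    // Shutting down on destruction means the resource is always released.
    ~RecogniserSession() { Shutdown(); }

    // Safe to call more than once, like an explicit call from End Play.
    void Shutdown() {
        if (running_) {
            running_ = false;
            active_ = false;
        }
    }
    static bool IsActive() { return active_; }

    RecogniserSession(const RecogniserSession&) = delete;
    RecogniserSession& operator=(const RecogniserSession&) = delete;

private:
    bool running_ = true;
    static inline bool active_ = false;
};
```

Starting a second session while the first is still running fails loudly here instead of crashing later; once the first has been shut down, a new one can start.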

Speech Recognition Events

As well as the event fired when recognised phrases are detected, there are hooks for other events. Here is the full list:

- OnWordsSpoken: triggered when silence is broken, and one or more recognised phrases are detected.

- OnUnknownPhrase: triggered when silence is broken, and no recognised phrases are detected.

- OnStartedSpeaking: triggered when silence is broken, and speech is detected.

- OnStoppedSpeaking: triggered when speech is broken by silence.
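The plugin raises these events itself; the sketch below is a hypothetical C++ model of how they relate, fed one frame at a time with a speech/silence flag and any phrases recognised in that frame. The exact order in which the plugin fires the events is not documented here, so firing OnStoppedSpeaking before OnWordsSpoken/OnUnknownPhrase is an assumption.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical model of the four events; not the plugin's implementation.
struct SpeechEvents {
    std::function<void(const std::vector<std::string>&)> OnWordsSpoken;
    std::function<void()> OnUnknownPhrase;
    std::function<void()> OnStartedSpeaking;
    std::function<void()> OnStoppedSpeaking;

    void ProcessFrame(bool speechPresent, const std::vector<std::string>& recognised) {
        if (speechPresent && !speaking_) {  // silence is broken
            speaking_ = true;
            if (OnStartedSpeaking) OnStartedSpeaking();
        }
        // Collect phrases recognised during the utterance.
        if (speaking_) heard_.insert(heard_.end(), recognised.begin(), recognised.end());
        if (!speechPresent && speaking_) {  // speech is broken by silence
            speaking_ = false;
            if (OnStoppedSpeaking) OnStoppedSpeaking();
            if (!heard_.empty()) {
                if (OnWordsSpoken) OnWordsSpoken(heard_);
            } else if (OnUnknownPhrase) {
                OnUnknownPhrase();
            }
            heard_.clear();
        }
    }

private:
    bool speaking_ = false;
    std::vector<std::string> heard_;
};
```

Feeding a speech frame containing "turn left" followed by a silent frame produces OnStartedSpeaking, OnStoppedSpeaking, then OnWordsSpoken with "turn left"; an utterance with no recognised phrase ends in OnUnknownPhrase instead.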

Plans ahead

- Improve the accuracy

- Expose additional properties of recognised speech in a structure that can be queried.

For example, was the phrase "turn left" spoken softly or loudly, and how long did it take to say?

- Long term: experiment with acoustic model adaptation.

http://cmusphinx.sourceforge.net/wiki/tutorialadapt

Contact Information

If you have any suggestions or questions, I would love to hear them. Please feel free to e-mail me at shane.colbert@gmail.com. If you wish to help out, the project is on GitHub.